Foundation

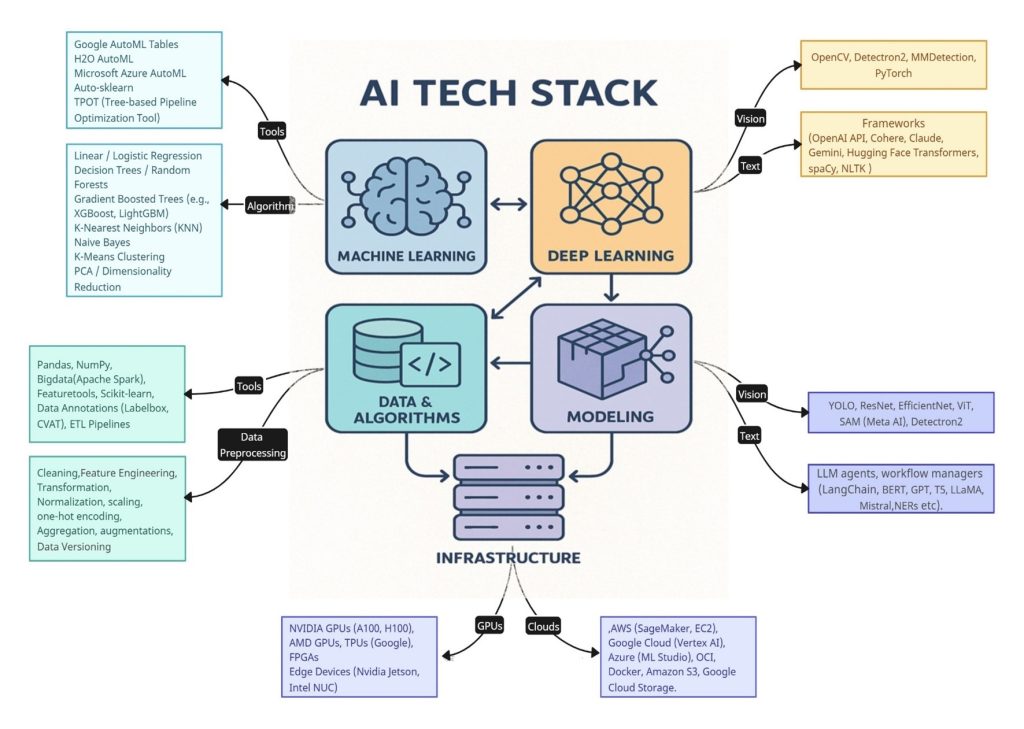

In today’s AI-driven world, choosing the right tools and frameworks isn’t just a preference — it’s the difference between building something smart and building something that truly works. Whether you’re deploying a computer vision system, building a recommendation engine, or launching an LLM-powered agent, a well-structured AI tech stack is essential.

The AI stack is a structural framework of interdependent layers, each serving a critical function in keeping the system efficient and effective. This layered approach allows for modularity, scalability, and easier troubleshooting. Artificial intelligence isn’t powered by a single technology or tool; it’s an ecosystem. Just as modern software runs on stacks of interconnected tools, AI development follows a layered architecture known as the AI tech stack.

A Proven Six-Layer Architecture

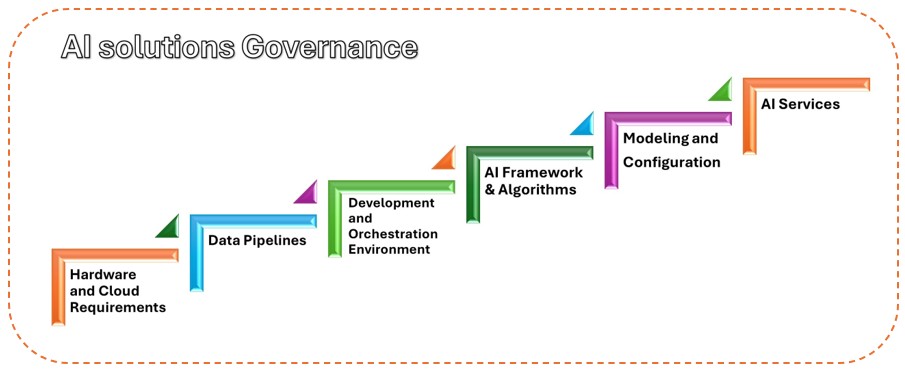

AI Solutions Governance: For Responsible Decision Making

Robust governance isn’t the final step in the process; it’s the foundation upon which the entire AI stack is built. It’s a framework of principles that ensures every decision, from data collection to model deployment, is made responsibly. For us, this is a moral and ethical obligation that defines how we build technology. Our governance model stands on three core pillars:

- Ethical Design and Fairness

- Responsibility and Explainability

- Security and Lifecycle Management

This governance model underpins the six-layer architecture for AI solution development.

1. Infrastructure & Platforms

Core Elements:

- GPUs/TPUs, cloud services, and hardware accelerators

- Hybrid-cloud orchestration

- Distributed architectures, caching, low-latency storage

- Edge Devices & Specialized Chips (e.g., Jetson, FPGAs)

This matches the scholarly emphasis on compute scalability and telemetry. For instance, IBM’s hybrid AI architecture underscores the synergy between specialized chips, cloud telemetry, and performance profiling.

2. Data Pipelines & Cataloguing

Effective AI relies on robust data pipelines that cover ingestion, annotation, cleaning, feature engineering, transformation, lineage tracking, and governance.
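To make the pipeline stages concrete, here is a minimal sketch of a staged pipeline that records simple lineage (which stage ran, and how many records went in and out). The `Pipeline` class, the `clean` and `transform` stages, and the sample records are all hypothetical names chosen for illustration; a production pipeline would typically rely on a dedicated orchestration or catalog tool.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Pipeline:
    """Runs named stages in order and records lineage for each one."""
    stages: list = field(default_factory=list)
    lineage: list = field(default_factory=list)

    def add(self, name: str, fn: Callable[[list], list]) -> "Pipeline":
        self.stages.append((name, fn))
        return self

    def run(self, records: list) -> list:
        self.lineage.clear()
        for name, fn in self.stages:
            rows_in = len(records)
            records = fn(records)
            # Lineage entry: which stage ran and how the row count changed.
            self.lineage.append(
                {"stage": name, "rows_in": rows_in, "rows_out": len(records)}
            )
        return records


def clean(records: list) -> list:
    # Drop samples whose label is missing.
    return [r for r in records if r.get("label") is not None]


def transform(records: list) -> list:
    # Normalize label text so "Vehicle " and "vehicle" are the same class.
    return [{**r, "label": r["label"].strip().lower()} for r in records]


# Hypothetical raw samples, as they might arrive from ingestion.
raw = [
    {"id": 1, "label": " Vehicle "},
    {"id": 2, "label": None},
    {"id": 3, "label": "SAFE"},
]

pipeline = Pipeline().add("clean", clean).add("transform", transform)
result = pipeline.run(raw)
print(result)
print(pipeline.lineage)
```

The lineage list is deliberately small here; the point is only that every stage leaves a record behind, so a later audit can reconstruct how a dataset was produced.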

Advanced patterns like data mesh—decentralizing pipeline responsibility across domain-aligned teams—are gaining attention in engineering research for improved scalability and collaboration.

3. Development and Orchestration Environment

This layer is the central workbench and control tower for your entire AI lifecycle. It’s an integrated environment that sits on top of the Infrastructure & Platforms layer, providing data scientists and ML engineers with the tools they need to build, train, deploy, and manage models systematically.

4. Foundations of AI Frameworks & Algorithms

The “AI framework” layer houses libraries such as TensorFlow, PyTorch, and Scikit-learn. These follow toolbox design patterns, where components support diverse workflows, from preprocessing to model optimization. Such patterns were analyzed in depth in a study on ML toolbox design published on arXiv.

5. Modeling Layers: Deep Learning & Agents

Vision & Network Models

Architectures like ResNet, Vision Transformer, YOLO, U-Net, and SAM reflect task-specific structure and parametric tuning.

NLP & LLMs

Transformer-based models (BERT, GPT, T5, LLaMA, Mistral) are foundational.

“Attention Is All You Need,” the landmark paper that introduced the Transformer architecture, remains one of the most cited works of the past decade.

Agent Orchestration

Multi-agent workflows (e.g., LangChain, AutoGen) are being formulated into higher-order patterns that embed reasoning steps into orchestration pipelines.
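As a rough illustration of the orchestration idea, the sketch below chains two toy "agents" over a shared state and keeps a trace of what each one contributed. It does not use the LangChain or AutoGen APIs; `planner`, `executor`, and `orchestrate` are hypothetical stand-ins, and a real agent would call a model rather than split a string.

```python
from typing import Callable

# An agent reads the shared state and returns the keys it wants to update.
Agent = Callable[[dict], dict]


def planner(state: dict) -> dict:
    # Hypothetical planning step: split the task into ordered sub-steps.
    return {"plan": [step.strip() for step in state["task"].split(" then ")]}


def executor(state: dict) -> dict:
    # Hypothetical execution step: pretend to carry out each planned step.
    return {"results": [f"done: {step}" for step in state["plan"]]}


def orchestrate(task: str, agents: list) -> dict:
    """Run agents in order, merging their updates and recording a trace."""
    state = {"task": task, "trace": []}
    for name, agent in agents:
        updates = agent(state)
        state.update(updates)
        # The trace makes each reasoning step inspectable after the run.
        state["trace"].append({"agent": name, "wrote": sorted(updates)})
    return state


final = orchestrate(
    "detect vehicles then count them",
    [("planner", planner), ("executor", executor)],
)
print(final["results"])
print(final["trace"])
```

Keeping the trace alongside the results is the design choice worth noting: it is what lets an orchestration layer be monitored and debugged separately from the models it calls.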

6. AI Services & Solutions

These include LLM-powered APIs, chatbots, vision services, and multi-agent orchestrators. Business-focused analyses show a trend toward treating observability and orchestration as distinct layers in enterprise AI, separating core inference from prompt/tool chaining and monitoring.

AI Frameworks – Multi Device Support

AI frameworks serve as the mid-layer glue between infrastructure (hardware/accelerators) and higher-level model development. They abstract tensor operations, gradient flows, and model definitions—allowing researchers and developers to focus on experimentation and deployment.
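To show what “abstracting gradient flows” means in practice, here is a toy reverse-mode automatic differentiation sketch in plain Python. It is not how PyTorch or TensorFlow are implemented; the `Value` class is a hypothetical, scalar-only illustration of the bookkeeping those frameworks handle for tensors.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # set by the operation that created this node

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Build a topological order so each node runs after its consumers.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


# y = w * x + b, the core of a single linear unit.
w, x, b = Value(2.0), Value(3.0), Value(1.0)
y = w * x + b
y.backward()
print(y.data, w.grad, x.grad, b.grad)
```

The user only writes `w * x + b`; the graph construction and the chain rule happen behind the operators, which is the kind of abstraction the framework layer provides at scale.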

The three most widely adopted frameworks are PyTorch, TensorFlow, and Keras.

| Feature | TensorFlow | PyTorch | Keras |
|---|---|---|---|
| Development | Developed by Google | Developed by Meta AI (formerly Facebook AI Research, FAIR) | Developed by François Chollet (at Google) |
| Type | Deep learning framework | Deep learning framework | High-level API that runs on top of TensorFlow, PyTorch, and JAX |
| Ease of Use | Can be complex, especially for beginners | Known for simplicity and ease of use | Extremely user-friendly and simple |
| Community | Large community and extensive resources | Growing community and active development | Established community and extensive resources |
| Performance | High performance, optimized for distributed training | High performance, suitable for research and production | Competitive performance, optimized for the TensorFlow backend |
| Deployment | Strong support for production deployment | Deployment capabilities are improving | Easily deployable with TensorFlow Serving and TensorFlow Lite |
| Use Cases | Wide range of deep learning applications | Wide range of deep learning applications | Versatile, supports image recognition and NLP |
| Popularity | Widely used in industry and research | Increasing popularity, especially in research | Highly popular, especially with TensorFlow integration |

Research Trends:

- PyTorch 2.0 introduced TorchDynamo, AOTAutograd, and TorchInductor, narrowing the performance gap with XLA (TensorFlow).

- TensorFlow is pivoting to support multi-language ML pipelines, TFX, and Edge AI.

- Keras is evolving into a framework-agnostic interface with backends for JAX, PyTorch, and TensorFlow under the Keras Core initiative (since released as Keras 3).

Framework & Patterns We Trust

An AI solution is a carefully designed tech stack: a set of tools and practices that keep everything running smoothly. Here’s how we design AI systems we trust:

1. Collecting and Preparing the Right Data

Why it matters:

AI learns from data — the better the data, the better the results.

What we do:

- Capture real-time videos or images from cameras or online sources.

- Label and organize this data (for example, telling the system “this is a vehicle” or “this is safe”).

- Keep track of every version so we always know what the system learned from.
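One simple way to “keep track of every version” is to fingerprint the labeled dataset, so any change to files or labels yields a new version ID. The sketch below assumes a hypothetical manifest format (a list of `file`/`label` dictionaries) and a hypothetical helper named `dataset_version`; dedicated data-versioning tools offer far more than this.

```python
import hashlib
import json


def dataset_version(samples: list) -> str:
    """Return a short, deterministic fingerprint of a labeled dataset."""
    # Sort by file name so that sample order does not change the version.
    ordered = sorted(samples, key=lambda s: s["file"])
    canonical = json.dumps(ordered, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical manifests: the second adds one newly labeled image.
v1_samples = [
    {"file": "cam1/0001.jpg", "label": "vehicle"},
    {"file": "cam1/0002.jpg", "label": "safe"},
]
v2_samples = v1_samples + [{"file": "cam2/0001.jpg", "label": "vehicle"}]

v1 = dataset_version(v1_samples)
v1_reordered = dataset_version(list(reversed(v1_samples)))
v2 = dataset_version(v2_samples)

print("v1:", v1)
print("same data, different order:", v1 == v1_reordered)
print("after adding a sample:", v1 != v2)
```

Storing this ID next to each trained model is enough to answer the question “what did this model learn from?” later on.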

In real life:

Like teaching a child by showing thousands of examples — but being very careful to store and remember every lesson properly.

2. Training/Tuning the AI Model

Why it matters:

This is where the AI learns to “see,” “understand,” or “predict” things from data.

What we do:

- Use proven frameworks (the “brain” of the AI) to teach it how to detect objects, recognize faces, or understand behavior.

- Improve its understanding by training it on examples, just like coaching a player.

- Track experiments to see which method works best.
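Experiment tracking can start as little more than a structured log of parameters and metrics. The following is a minimal sketch with a hypothetical `ExperimentLog` class; the run names, hyperparameters, and accuracy figures are made-up illustration values, not measured results.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentLog:
    """Stores one entry per training run so runs can be compared later."""
    runs: list = field(default_factory=list)

    def log(self, name: str, params: dict, metrics: dict) -> None:
        # Copy the dicts so later mutation by the caller cannot alter history.
        self.runs.append(
            {"name": name, "params": dict(params), "metrics": dict(metrics)}
        )

    def best(self, metric: str, higher_is_better: bool = True) -> dict:
        pick = max if higher_is_better else min
        return pick(self.runs, key=lambda run: run["metrics"][metric])


log = ExperimentLog()
# Illustrative numbers only.
log.log("baseline", {"lr": 0.01, "epochs": 10}, {"val_accuracy": 0.81})
log.log("lower-lr", {"lr": 0.001, "epochs": 10}, {"val_accuracy": 0.86})
log.log("more-epochs", {"lr": 0.01, "epochs": 30}, {"val_accuracy": 0.84})

best_run = log.best("val_accuracy")
print(best_run["name"], best_run["params"])
```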

In real life:

Like training a dog to recognize voices or gestures — you try, reward, and refine until it performs well.

3. Quality Assurance and Testing

Why it matters:

Before AI is trusted in the real world, it needs to pass the test — not just once, but every time.

What we do:

- Test models on unseen, real-world scenarios to ensure reliability.

- Simulate edge cases like bad lighting, motion blur, or occlusion.

- Validate performance across devices — from cloud servers to embedded boards.
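Edge-case testing can be sketched as “perturb the input, then check that the prediction stays the same.” The example below uses a tiny grayscale image stored as nested lists and a deliberately naive `toy_detector` in place of a real model; every function name here is hypothetical, and real tests would use actual images and an actual model.

```python
def darken(image, factor):
    """Simulate bad lighting by scaling every pixel (0-255) toward black."""
    return [[int(p * factor) for p in row] for row in image]


def blur_rows(image):
    """Crude horizontal motion blur: average each pixel with its row neighbors."""
    blurred = []
    for row in image:
        out = []
        for i in range(len(row)):
            window = row[max(0, i - 1): i + 2]
            out.append(sum(window) // len(window))
        blurred.append(out)
    return blurred


def toy_detector(image, threshold=100):
    """Stand-in for a real model: reports an object if any pixel is bright."""
    return any(p >= threshold for row in image for p in row)


def robustness_report(image, model, perturbations):
    """For each perturbation, record whether the prediction is unchanged."""
    baseline = model(image)
    return {name: model(fn(image)) == baseline for name, fn in perturbations.items()}


# A dark frame with one bright object in the middle row.
image = [
    [10, 10, 10, 10],
    [10, 220, 220, 10],
    [10, 10, 10, 10],
]

report = robustness_report(image, toy_detector, {
    "mild_dimming": lambda img: darken(img, 0.8),
    "severe_dimming": lambda img: darken(img, 0.3),
    "motion_blur": blur_rows,
})
print(report)
```

In this toy setup the detector survives mild dimming and blur but fails under severe dimming, which is exactly the kind of gap such a test is meant to surface before deployment.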

In real life:

Like crash-testing a car before it hits the road — safety comes from preparation, not hope.

4. Learning from Patterns & Monitoring in the Real World

Why it matters:

AI shouldn’t just react to the moment — it should recognize trends, adapt, and keep improving.

What we do:

- Use memory-based models that understand sequences, not just snapshots (for example, how a scene changes over time).

- Spot unusual patterns by learning what’s “normal.”

- Track how the AI behaves in the real world — its accuracy, speed, and even hardware performance.

- Retrain it when new data arrives or when it starts missing the mark.
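The monitoring loop above can be approximated with a simple statistical check: learn what “normal” looks like from a baseline window, flag values that fall far outside it, and trigger retraining when too many recent values are flagged. The `DriftMonitor` class, the z-score threshold, the 20% retraining rule, and the confidence values below are all assumptions made for illustration.

```python
import statistics


class DriftMonitor:
    """Learns 'normal' from a baseline window, then flags values far from it."""

    def __init__(self, baseline, z_threshold=3.0):
        self.mean = statistics.mean(baseline)
        self.stdev = statistics.stdev(baseline)
        self.z_threshold = z_threshold

    def is_anomaly(self, value):
        # A value is unusual if it is many standard deviations from the mean.
        return abs(value - self.mean) / self.stdev > self.z_threshold

    def needs_retraining(self, recent, max_anomaly_rate=0.2):
        # Retrain when too large a share of recent values looks abnormal.
        flagged = sum(self.is_anomaly(v) for v in recent)
        return flagged / len(recent) > max_anomaly_rate


# Illustrative per-batch model confidence scores, not real measurements.
baseline_confidence = [0.91, 0.93, 0.92, 0.90, 0.94, 0.92, 0.91, 0.93]
monitor = DriftMonitor(baseline_confidence)

healthy_day = [0.92, 0.91, 0.93, 0.90, 0.92]
drifting_day = [0.92, 0.70, 0.65, 0.91, 0.60]

print("healthy day needs retraining:", monitor.needs_retraining(healthy_day))
print("drifting day needs retraining:", monitor.needs_retraining(drifting_day))
```

The same pattern applies to latency or hardware metrics: only the baseline values and thresholds change.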

In real life:

Like a veteran aircraft mechanic who not only performs routine maintenance but can also tell from a slight change in the engine’s hum that something is wrong.

5. Making It Easy to Deploy and Scale

Why it matters:

The same AI should work on a single camera or across an entire city.

What we do:

- Package AI models into portable “containers” so they run anywhere — from tiny boards to cloud platforms.

- Automate testing and updates to avoid manual mistakes.

- Build systems that are secure, reliable, and scalable.

In real life:

Like designing a power bank that works across every phone, not just one.

Conclusion

A great AI system isn’t just a smart algorithm — it’s an ecosystem built for clarity, reliability, and trust. Every part of our tech stack — from data and models to deployment and monitoring — is designed with human-level accountability in mind.

We call it the AI Stack We Trust, because it delivers what matters most: clarity, performance, and peace of mind — whether you’re building on the cloud or deploying AI at the edge.

“AI should never be just smart — it should be responsible.”